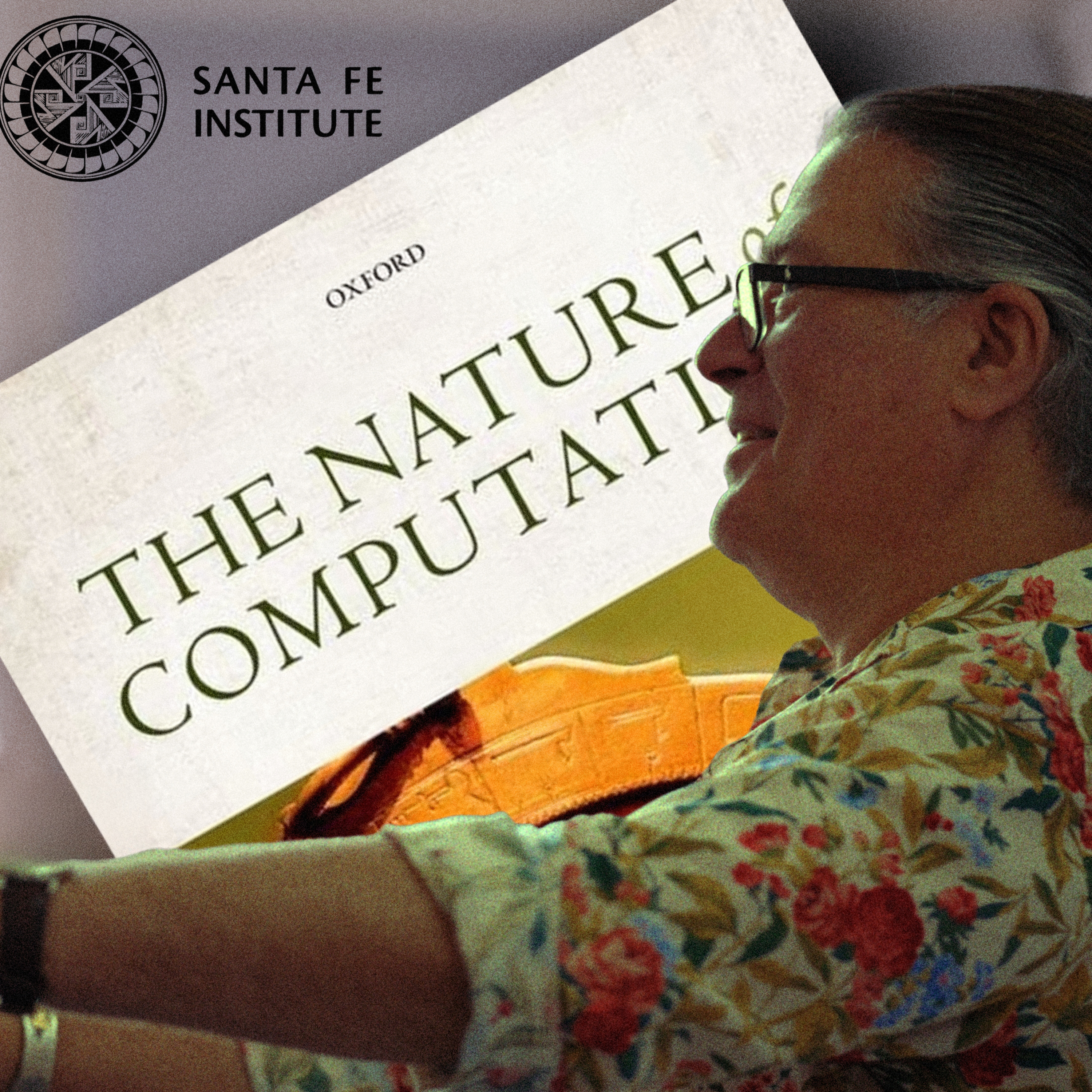

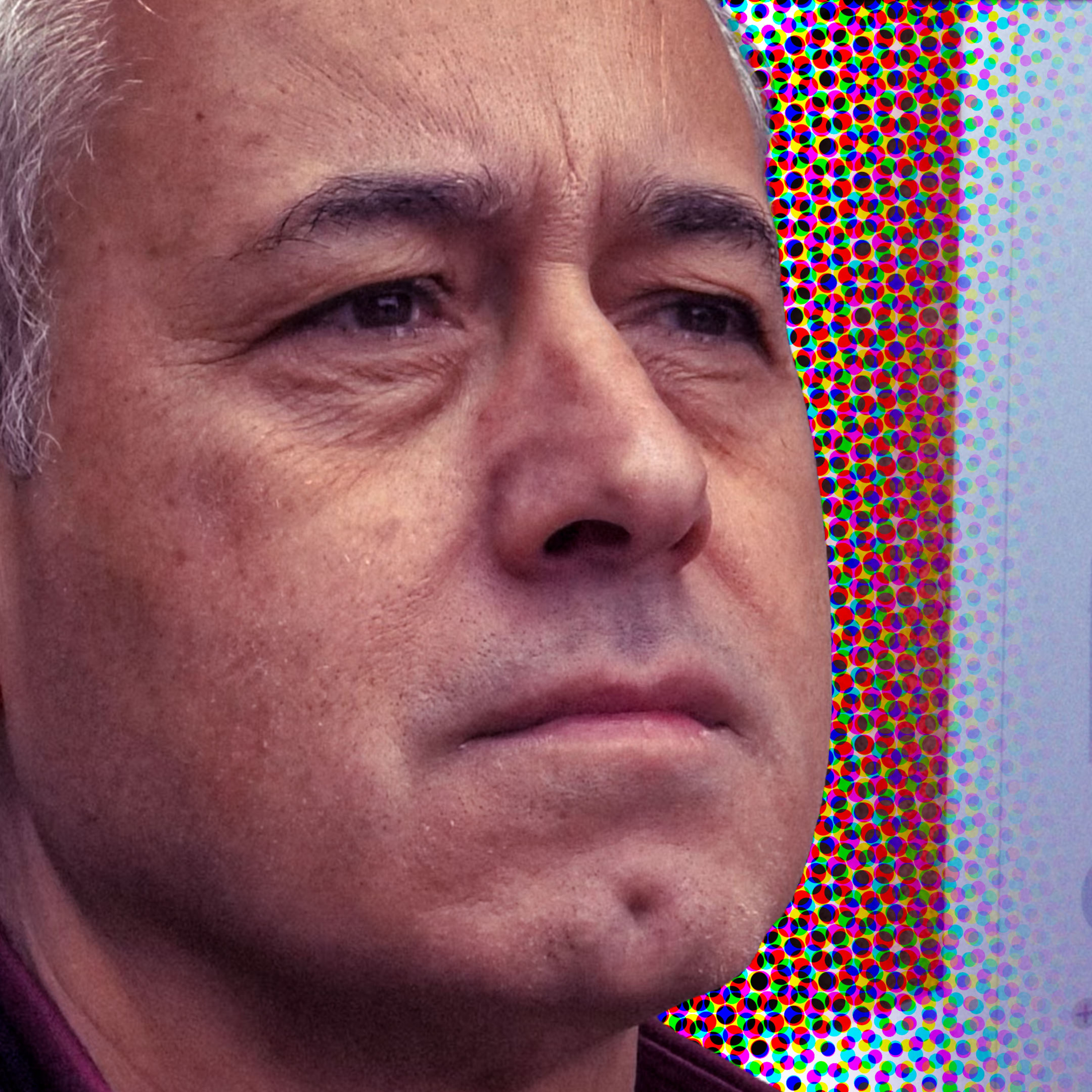

The Day AI Solves My Puzzles Is The Day I Worry (Prof. Cristopher Moore)

Machine Learning Street Talk

Episode Description

<p>We are joined by Cristopher Moore, a professor at the Santa Fe Institute with a diverse background in physics, computer science, and machine learning.</p><p><br></p><p>The conversation begins with Cristopher, who calls himself a "frog", explaining that he prefers to dive deep into specific, concrete problems rather than take a high-level "bird's-eye view".</p><p><br></p><p>The discussion then turns to why current AI models, such as transformers, are so surprisingly effective. Cristopher argues that it's because the real world isn't random: it's full of rich structures, patterns, and hierarchies that these models can learn to exploit, even if we don't fully understand how.</p><p><br></p><p>**SPONSORS**</p><p>Take the Prolific human data survey - https://www.prolific.com/humandatasurvey?utm_source=mlst and be the first to see the results and benchmark your practices against the wider community!</p><p>---</p><p>cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economy.</p><p>Oct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!), plus OAI, Anthropic, NVDA and more</p><p>Hiring an SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlst</p><p>Submit investment deck: https://cyber.fund/contact?utm_source=mlst</p><p>***</p><p><br></p><p>Cristopher Moore:</p><p>https://sites.santafe.edu/~moore/</p><p><br></p><p>TOC:</p><p>00:00:00 - Introduction</p><p>00:02:05 - Meet Cristopher Moore: A Frog in the World of Science</p><p>00:05:14 - The Limits of Transformers and Real-World Data</p><p>00:11:19 - Intelligence as Creative Problem-Solving</p><p>00:23:30 - Grounding, Meaning, and Shared Reality</p><p>00:31:09 - The Nature of Creativity and Aesthetics</p><p>00:44:31 - Computational Irreducibility and Universality</p><p>00:53:06 - Turing Completeness, Recursion, and Intelligence</p><p>01:11:26 - The Universe Through a Computational Lens</p><p>01:26:45 - Algorithmic Justice and the Need for Transparency</p><p><br></p><p>TRANSCRIPT: https://app.rescript.info/public/share/VRe2uQSvKZOm0oIBoDsrNwt46OMCqRnShVnUF3qyoFk</p><p><br></p><p>Filmed at DISI (Diverse Intelligences Summer Institute)</p><p>https://disi.org/</p><p><br></p><p>REFS:</p><p>The Nature of Computation [Moore/Mertens]</p><p>https://nature-of-computation.org/</p><p><br></p><p>Birds and Frogs [Freeman Dyson]</p><p>https://www.ams.org/notices/200902/rtx090200212p.pdf</p><p><br></p><p>Replica Theory [Parisi et al.]</p><p>https://arxiv.org/pdf/1409.2722</p><p><br></p><p>Janossy Pooling [Fabian Fuchs]</p><p>https://fabianfuchsml.github.io/equilibriumaggregation/</p><p><br></p><p>Cracking the Cryptic [YT channel]</p><p>https://www.youtube.com/c/CrackingTheCryptic</p><p><br></p><p>Sudoku-Bench [Sakana]</p><p>https://sakana.ai/sudoku-bench/</p><p><br></p><p>Fractured Entangled Representations “phylogenetic locking in comment” [Kumar/Stanley]</p><p>https://arxiv.org/pdf/2505.11581 (see our shows on this)</p><p><br></p><p>The War Against Cliché [Martin Amis]</p><p>https://www.amazon.com/War-Against-Cliche-Reviews-1971-2000/dp/0375727167</p><p><br></p><p>Rule 110 (CA)</p><p>https://mathworld.wolfram.com/Rule150.html</p><p><br></p><p>Universality in Elementary Cellular Automata [Matthew Cook]</p><p>https://wpmedia.wolfram.com/sites/13/2018/02/15-1-1.pdf</p><p><br></p><p>Small Semi-Weakly Universal Turing Machines [Woods/Neary]</p><p>https://tilde.ini.uzh.ch/users/tneary/public_html/WoodsNeary-FI09.pdf</p><p><br></p><p>Computing Machinery and Intelligence [Turing, 1950]</p><p>https://courses.cs.umbc.edu/471/papers/turing.pdf</p><p><br></p><p>Comment on “Space-Time as a Causal Set” [Moore, 1988]</p><p>https://sites.santafe.edu/~moore/comment.pdf</p><p><br></p><p>Recursion Theory on the Reals and Continuous-Time Computation [Moore, 1996]</p>

Full Transcript